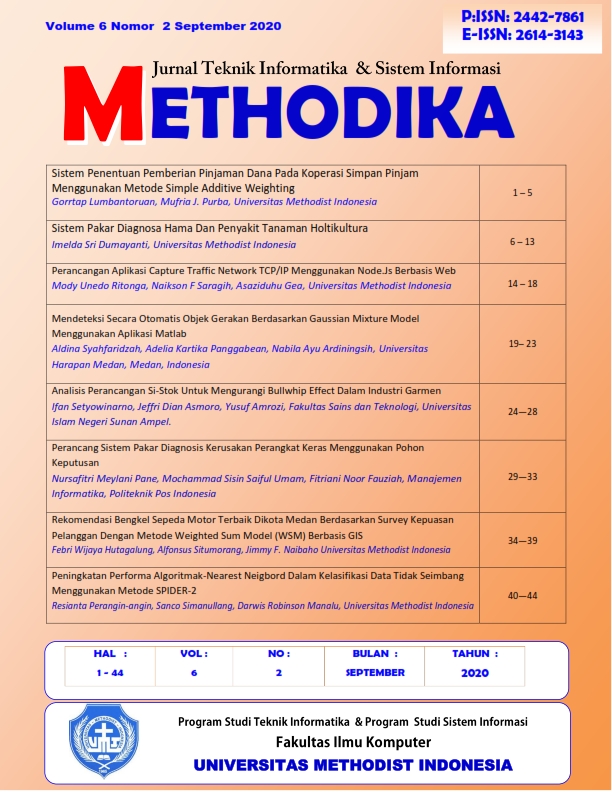

IMPROVING THE PERFORMANCE OF THE K-NEAREST NEIGHBOR ALGORITHM IN IMBALANCED DATA CLASSIFICATION USING THE SPIDER-2 METHOD

DOI:

https://doi.org/10.46880/mtk.v6i2.426

Keywords:

Data Mining, SPIDER-2, KNN, Unbalancing Data

Abstract

Class imbalance is a persistent problem in machine learning and classification. The class with fewer examples is called the minority class, and the other is called the majority class. In practice, real data mined directly from a database is imbalanced. This makes it difficult for a classification method to generalize during the learning process. Almost all classification algorithms, such as Naive Bayes, Decision Tree, and K-Nearest Neighbor (KNN), perform very poorly on data with highly imbalanced classes, because they are not equipped to deal with class imbalance. Many data preprocessing methods are used in cases of data imbalance; this study uses the SPIDER-2 method. Five different Ecoli datasets are used, each with a different level of data imbalance. After testing on datasets with imbalance ratios (IR) ranging from 1.86 to 15.80, the results show that adding the SPIDER-2 method as a dataset preprocessing step improves the performance of the KNN algorithm in classifying imbalanced data. Across the 5 trials, adding SPIDER-2 to KNN increased GM by 5.81% and FM by 14.47%.
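The abstract reports IR, GM, and FM without defining them. The sketch below shows one common way to compute such quantities. It assumes that IR is the majority-class count divided by the minority-class count, that GM is the geometric mean of sensitivity and specificity, and that FM is the F-measure (harmonic mean of precision and recall) for the minority class. The function names and the toy labels are hypothetical and are not taken from the study.

```python
from collections import Counter
from math import sqrt


def imbalance_ratio(labels):
    """Majority-class count divided by minority-class count (assumed IR definition)."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def gmean_and_fmeasure(y_true, y_pred, positive):
    """Return (G-mean, F-measure), treating `positive` as the minority class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)

    # Guard each ratio against an empty denominator.
    sensitivity = tp / (tp + fn) if tp + fn else 0.0  # recall on the minority class
    specificity = tn / (tn + fp) if tn + fp else 0.0  # recall on the majority class
    precision = tp / (tp + fp) if tp + fp else 0.0

    g_mean = sqrt(sensitivity * specificity)
    denom = precision + sensitivity
    f_measure = 2 * precision * sensitivity / denom if denom else 0.0
    return g_mean, f_measure


# Toy labels invented for illustration only (not the Ecoli data).
y_true = ["maj"] * 8 + ["min"] * 2
y_pred = ["maj"] * 7 + ["min"] + ["min", "maj"]

ir = imbalance_ratio(y_true)
g_mean, f_measure = gmean_and_fmeasure(y_true, y_pred, positive="min")
print(f"IR={ir:.2f}  GM={g_mean:.4f}  FM={f_measure:.4f}")
```

Both GM and FM are sensitive to errors on the minority class, which is why they are often preferred over plain accuracy when classes are imbalanced.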



License

Copyright (c) 2020 Methodika

This work is licensed under a Creative Commons Attribution 4.0 International License.